Why Do AI Models Make Mistakes Worksheets

About This Worksheet Collection

This collection helps students understand why AI models make mistakes, and why those mistakes can sometimes sound confident, convincing, or logical even when they're wrong. Students explore common error types such as ambiguity, missing context, flawed reasoning, outdated information, biased training data, and overgeneralization. Through analysis, revision, prediction, and classification tasks, they learn to read AI responses critically and to recognize where, how, and why things can go wrong.

Across the set, learners refine essential literacy and critical-thinking skills. They practice diagnosing faulty logic, rewriting unclear prompts, identifying multiple interpretations, spotting bias, and evaluating the quality of both input and output. These worksheets teach healthy skepticism, responsible AI use, and strong communication habits, all of which students need for fact-checking, academic work, and thoughtful digital citizenship.

Detailed Descriptions Of These Worksheets

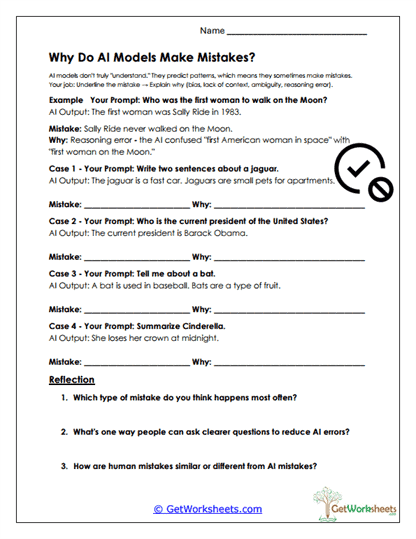

Why Do AI Models Make Mistakes?

Students analyze faulty AI outputs, identify each mistake, and explain why it happened. They connect errors to prediction-based reasoning, unclear prompts, or missing context. Reflection questions help them understand recurring mistake patterns and how careful prompting reduces them.

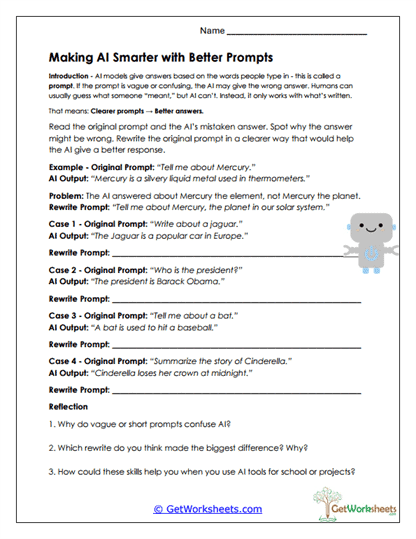

Making AI Smarter with Better Prompts

Learners examine vague prompts alongside the incorrect AI responses they produced, then rewrite the prompts to be clearer and more detailed. This teaches the direct connection between precise instructions and accurate output and reinforces strong communication skills.

Ambiguity Hunt

Students identify prompts with more than one meaning and explain two possible interpretations. They analyze how double meanings, unclear subjects, and homonyms create confusion for AI systems.

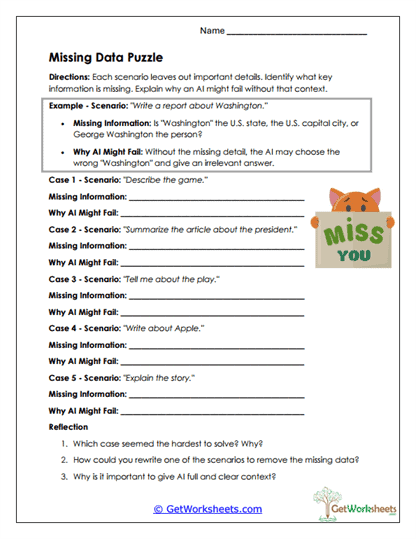

Missing Data Puzzle

Each scenario hides important information. Students identify what's missing and explain why the AI would likely produce an inaccurate response without it. This builds awareness of context sensitivity and the importance of complete instructions.

Bias Spotting

Learners examine AI outputs containing stereotypes or unfair assumptions. They highlight biased sections, explain the issue, and suggest how training data could be improved. This strengthens media literacy and ethical reasoning.

Chain of Reasoning Fix

Students study multi-step chains of AI reasoning and identify exactly where the logic fails. They then rewrite corrected reasoning paths, developing skills in logical analysis and problem-solving.

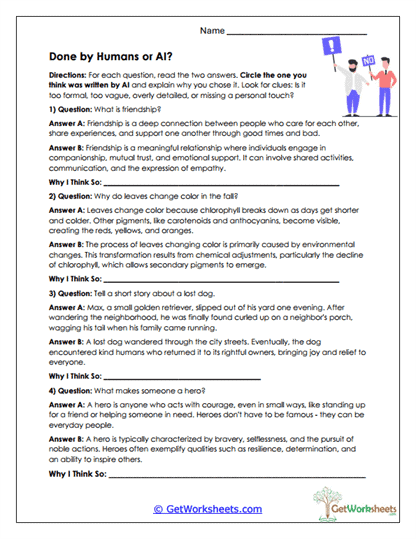

Done by Humans or AI?

Learners compare two responses and decide which was written by a human and which by an AI. They justify their answers by analyzing tone, emotional depth, structure, and detail.

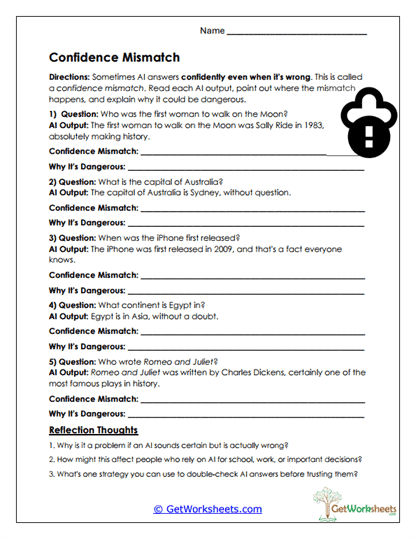

Confidence Mismatch

Students investigate cases where an AI gives incorrect answers with confidence. They explain the mismatch and discuss the real-world risks of trusting confident-sounding responses, building healthy skepticism and verification habits.

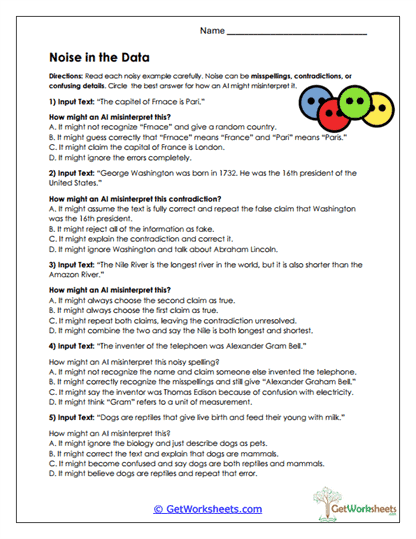

Noise in the Data

Learners analyze scenarios involving misspellings, contradictions, or unclear details and explain how "noise" affects AI interpretation. This builds understanding of information quality and error sources.

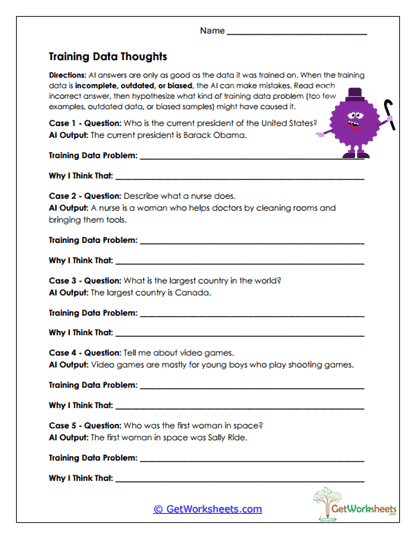

Training Data Thoughts

Students examine incorrect outputs and infer what kind of training data problem (bias, outdated facts, underrepresentation, oversimplification) may have caused each mistake.

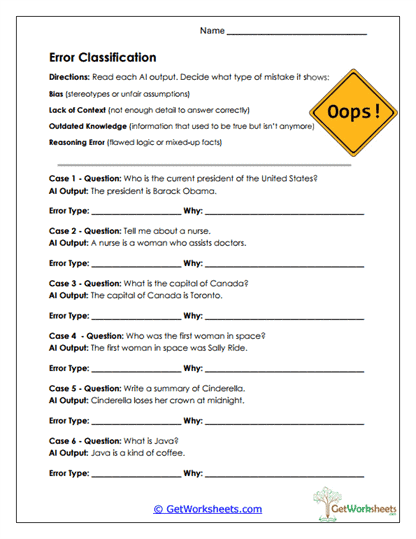

Error Classification

Learners classify AI outputs into error types such as Bias, Lack of Context, Outdated Knowledge, or Reasoning Error. They justify their classifications, strengthening analytical reading and logic evaluation.

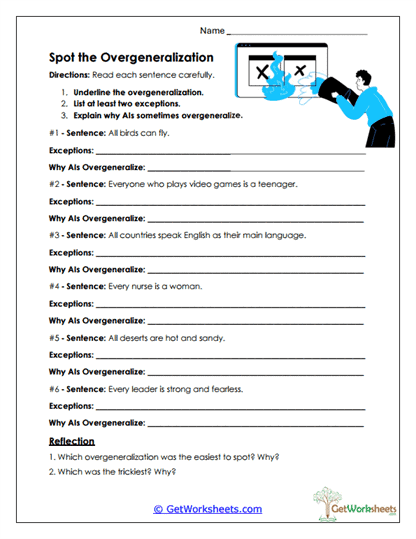

Spot the Overgeneralization

Students underline overgeneralized statements, list exceptions, and explain the reasoning flaw. This teaches recognition of a common AI error and strengthens logical thinking.
