How LLMs Work Worksheets

About This Worksheet Collection

This collection introduces students to the core ideas behind how large language models (LLMs) understand, predict, and generate text. Through hands-on activities, learners experience simplified versions of the strategies AI systems use, such as pattern recognition, context awareness, token prediction, and style adaptation. Each worksheet breaks down a complex concept into an accessible literacy or reasoning task, helping students see how familiar language skills directly connect to modern AI technology.

As they work through the activities, students practice inference, sequencing, contextual reasoning, editing, and creative writing. They also explore how prediction changes when more information is added, how patterns guide language flow, and how multiple valid continuations can exist for the same sentence. By connecting human language abilities to AI mechanisms, this collection builds foundational AI literacy while strengthening core reading and writing skills.

Detailed Descriptions Of These Worksheets

Tracking Information

Students read a short narrative and use earlier clues to fill in missing details, mirroring how LLMs track information across long text. They answer comprehension questions that reinforce attention to names, objects, and events. This worksheet builds inference skills and strengthens students' ability to follow continuity throughout a passage. It also supports detailed recall and story understanding.

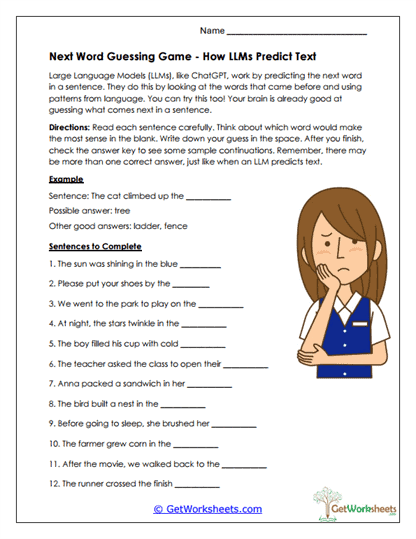

Next Word Game

Learners complete open-ended sentence starters to explore how context shapes prediction. They compare different possible completions to understand that many options can be reasonable depending on tone and meaning. The activity encourages creativity while demonstrating the idea of probability in language patterns.

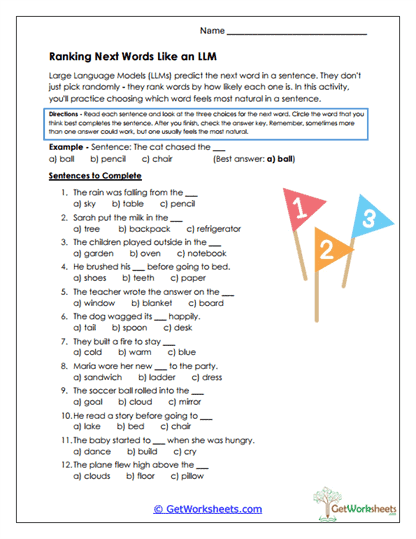

Ranking Next Words

Students choose the most natural next word from three options in each sentence, modeling how LLMs rank likely completions. This task sharpens awareness of grammar cues and phrasing that signal which word fits best. It deepens understanding of how language flows and supports skills in eliminating unlikely choices. Learners gain insight into the probability-based nature of AI predictions.
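For teachers who want to show the ranking idea on a screen, here is a minimal sketch that scores three candidate words by how often each one followed the previous word in a tiny sample text. The corpus, the function name `rank_next_words`, and the choice to look at only one previous word are all assumptions made for illustration; real LLMs learn from far more text and consider much more context.

```python
from collections import Counter

# Tiny made-up sample text (an assumption for this demo only).
CORPUS = (
    "the dog chased the ball . "
    "the dog chased the cat . "
    "the cat chased the mouse . "
    "the dog ate the bone ."
).split()

# Count how often each word follows each previous word.
follow_counts = {}
for prev, nxt in zip(CORPUS, CORPUS[1:]):
    follow_counts.setdefault(prev, Counter())[nxt] += 1


def rank_next_words(prev_word, candidates):
    """Return (word, probability) pairs sorted from most to least likely."""
    counts = follow_counts.get(prev_word, Counter())
    total = sum(counts.values())
    scored = [(w, counts[w] / total if total else 0.0) for w in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


ranking = rank_next_words("dog", ["ate", "chased", "sang"])
for word, prob in ranking:
    print(f"{word}: {prob:.2f}")
```

In this sample text, "chased" follows "dog" more often than "ate" does, and "sang" never appears, so the three options land in the same most likely, less likely, unlikely order students produce on paper.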

Word Likelihood Ranking

Students classify three possible next words as most likely, less likely, or unlikely given the context. They justify their decisions, prompting deeper reflection on subtle shifts in meaning. This activity highlights how context drives prediction and strengthens reasoning about vocabulary choices.

Missing Tokens

Learners fill in missing words removed from short passages, using context to determine the most fitting options. Each sentence contains two blanks, encouraging students to consider how pairs of words work together. The exercise improves cloze-reading skills and illustrates how AI models predict missing tokens from surrounding text.

Story Token Builder

Working with a partner, students build a story one word or phrase at a time without knowing where it will end. This models how LLMs generate text sequentially rather than planning full narratives in advance. The worksheet includes reflection questions that help students connect the activity to AI text-generation processes. It also encourages creativity and improvisation.
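The one-word-at-a-time idea can be sketched in a few lines of code. The example below is a toy, not how any real LLM is built: the `NEXT_WORDS` table and the `build_story` function are hypothetical, and a real model would compute probabilities over a large vocabulary rather than pick from a short hand-written list. What it does share with the partner activity is that each new word is chosen by looking only at what has been written so far.

```python
import random

# Hand-written table of words that may follow each word (an assumption
# for this demo; a real model learns these relationships from data).
NEXT_WORDS = {
    "once": ["upon"],
    "upon": ["a"],
    "a": ["time", "dragon", "robot"],
    "time": ["a"],
    "dragon": ["slept", "sang"],
    "robot": ["slept", "sang"],
}


def build_story(start, max_words=8, seed=None):
    """Grow a story one word at a time, with no plan for how it ends."""
    rng = random.Random(seed)
    story = [start]
    while len(story) < max_words:
        options = NEXT_WORDS.get(story[-1])
        if not options:  # no known continuation, so the story stops here
            break
        story.append(rng.choice(options))
    return " ".join(story)


print(build_story("once", seed=1))
print(build_story("once", seed=2))
```

Running it with different `seed` values usually produces different stories from the same opening, much as two pairs of students will end up in different places.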

Context Change Predictions

Students predict a sentence continuation twice: once without added context, and once with a specific setting such as a fairy tale or video game. This demonstrates how context dramatically shifts meaning and expectation. The activity strengthens flexible thinking and encourages awareness of semantic changes caused by different scenarios.

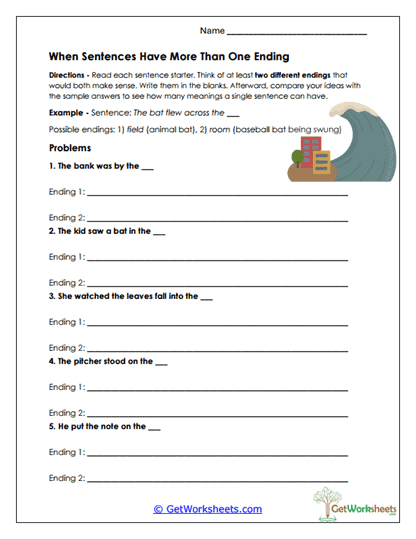

Multiple Endings

Learners explore how a single sentence starter can reasonably lead to multiple correct endings. They write at least two completions for each prompt, emphasizing ambiguity and linguistic variety. This worksheet builds creative writing skills while showing that AI models consider many valid continuations.

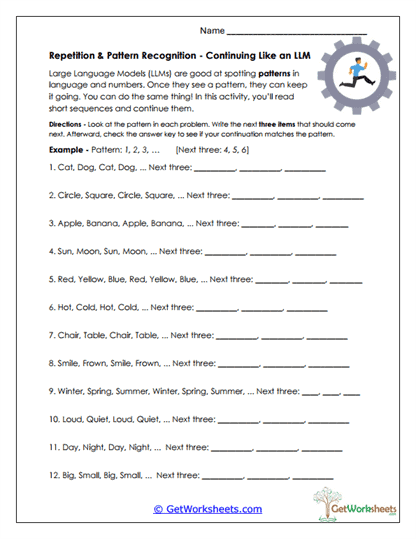

Pattern Recognition

Students extend simple patterns (such as opposites, cycles, or logical sequences) to see how AI systems use repetition when predicting text. The activity connects mathematical sequencing with language comprehension. It strengthens logical reasoning and supports students in recognizing relationships between concepts or words.

Fixing Noisy Text

Learners repair sentences that contain scrambled or partially corrupted words. They use context clues to determine what each damaged word should be. The activity builds decoding and editing skills and shows how AI models reconstruct incomplete or noisy input. It reinforces careful reading and language reconstruction.

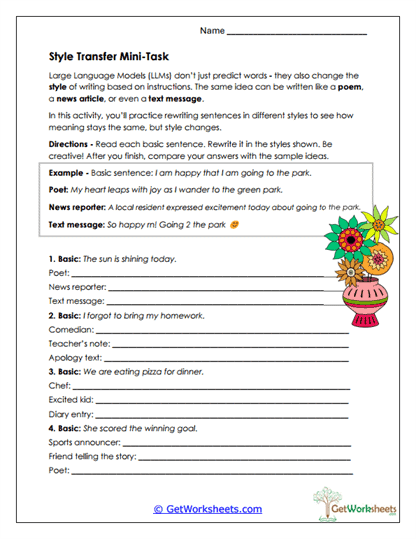

Style Transfer Task

Students rewrite the same base sentence in several different styles, such as poetry, diary entries, or news reports. The worksheet highlights how tone, format, and voice shape meaning even when the core idea stays the same. It encourages creativity and improves students' ability to adjust writing for different audiences. Learners also gain insight into how AI performs style transfer.

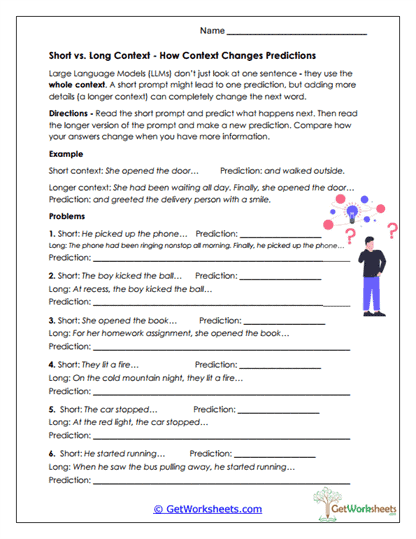

Short vs. Long Context

Students predict what comes next from a short prompt and then revise their prediction after reading a longer, more detailed version. This illustrates how added context narrows possibilities and improves accuracy. The activity encourages students to reconsider conclusions when new information appears and shows how LLMs rely on larger context to generate clearer output.
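The narrowing effect of extra context can also be shown with a small counting demo. This is a sketch under stated assumptions: the four sample sentences and the `next_word_counts` function are invented for illustration, and matching the last few words exactly is a much cruder method than what an LLM does. It compares which words followed a two-word context with which words followed a three-word context.

```python
from collections import Counter

# Invented sample sentences (an assumption for this demo only).
SENTENCES = [
    "the brave knight drew his sword",
    "the young artist drew his sketch",
    "the old knight drew his sword",
    "the tired artist drew his curtains",
]


def next_word_counts(context, window):
    """Count the words that followed the last `window` words of `context`."""
    key = tuple(context.split()[-window:])
    counts = Counter()
    for sentence in SENTENCES:
        words = sentence.split()
        for i in range(len(words) - window):
            if tuple(words[i:i + window]) == key:
                counts[words[i + window]] += 1
    return counts


short_view = next_word_counts("the knight drew his", window=2)
long_view = next_word_counts("the knight drew his", window=3)
print("Using 2 words of context:", dict(short_view))
print("Using 3 words of context:", dict(long_view))
```

With only "drew his" to go on, three different endings are possible in these sample sentences. Once "knight" is included, only "sword" remains, which mirrors how students revise their prediction after reading the longer prompt.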
